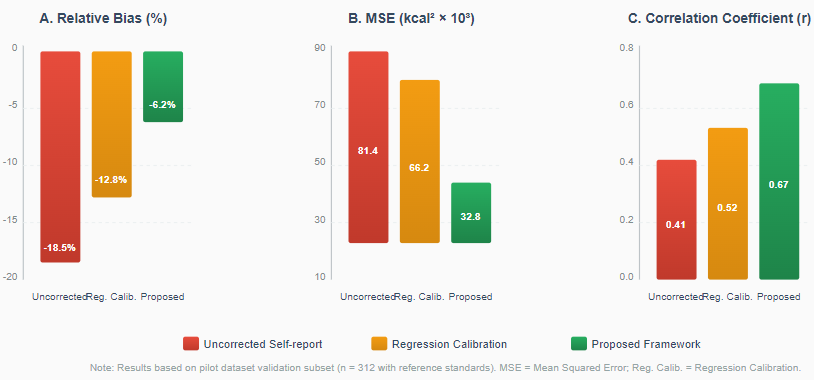

Accurately measuring dietary exposure is central to nutritional epidemiology, which seeks to establish causal relationships between diet and chronic disease. However, self-reported assessment tools such as food frequency questionnaires and dietary recalls introduce systematic underreporting and random error. These errors attenuate the regression coefficients of exposure-outcome associations and can obscure true diet-health effects altogether. Current correction methods, which rely on small reference samples and assume linear error structures, are poorly suited to handling heterogeneous errors in high-dimensional multimodal data. We propose a self-supervised multimodal representation learning framework for error-reduced dietary exposure measurement. The framework models dietary text logs and wearable sensor data jointly and, through cross-modal contrastive learning and masked reconstruction, learns discriminative features that are highly correlated with true intake. Trained over multi-view representations, it maps both modalities into a unified latent space from which it produces exposure-corrected estimates of dietary energy and nutrient intake.
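Two ideas in this abstract can be illustrated with a small NumPy sketch: the claim that random error in self-reported intake shrinks exposure-outcome coefficients, and the cross-modal contrastive alignment the framework relies on. This is not the authors' implementation. The simulation assumes classical, non-differential additive error, so it does not model systematic underreporting. Under that assumption the naive slope converges to λβ, where λ = σ²_X / (σ²_X + σ²_U). The function `symmetric_info_nce` assumes a CLIP-style symmetric InfoNCE objective over paired text and sensor embeddings. The function names, temperature, and embedding sizes are hypothetical illustrative choices.

```python
import numpy as np


def attenuation_demo(n=100_000, beta=0.5, sigma_x=1.0, sigma_u=1.0, seed=0):
    """Simulate classical additive measurement error (an assumption) and
    return (naive OLS slope, theoretical attenuated slope lambda * beta)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, sigma_x, n)          # true (unobserved) intake
    w = x + rng.normal(0.0, sigma_u, n)      # self-reported intake with random error
    y = beta * x + rng.normal(0.0, 1.0, n)   # health outcome driven by true intake
    # OLS slope of y on the error-prone w (np.cov uses ddof=1 by default).
    slope = np.cov(w, y)[0, 1] / np.var(w, ddof=1)
    lam = sigma_x**2 / (sigma_x**2 + sigma_u**2)  # reliability ratio
    return slope, lam * beta


def symmetric_info_nce(text_emb, sensor_emb, temperature=0.1):
    """Hypothetical CLIP-style symmetric InfoNCE loss. Row i of each matrix is
    assumed to describe the same eating occasion (positive pair); the other
    rows in the batch serve as negatives."""
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    s = sensor_emb / np.linalg.norm(sensor_emb, axis=1, keepdims=True)
    logits = t @ s.T / temperature           # (batch, batch) scaled cosine similarities
    idx = np.arange(len(logits))

    def cross_entropy(l):
        l = l - l.max(axis=1, keepdims=True)  # subtract row max for numerical stability
        log_probs = l - np.log(np.exp(l).sum(axis=1, keepdims=True))
        return -log_probs[idx, idx].mean()    # diagonal entries are the matching pairs

    # Average the text->sensor and sensor->text directions.
    return 0.5 * (cross_entropy(logits) + cross_entropy(logits.T))


slope, expected = attenuation_demo()
print(slope, expected)  # naive slope lands near the attenuated value, not the true beta

rng = np.random.default_rng(1)
text = rng.normal(size=(8, 16))
aligned = text + 0.05 * rng.normal(size=(8, 16))  # sensor views close to their text pair
mismatched = np.roll(aligned, 1, axis=0)           # every pair deliberately misaligned
print(symmetric_info_nce(text, aligned), symmetric_info_nce(text, mismatched))
```

With equal true-intake and error variances, λ = 0.5, so the naive slope falls to about half the true effect. The contrastive loss is low when each text embedding is closest to its own sensor embedding and high when the pairing is broken. Minimizing it pulls the two modalities into a shared latent space.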