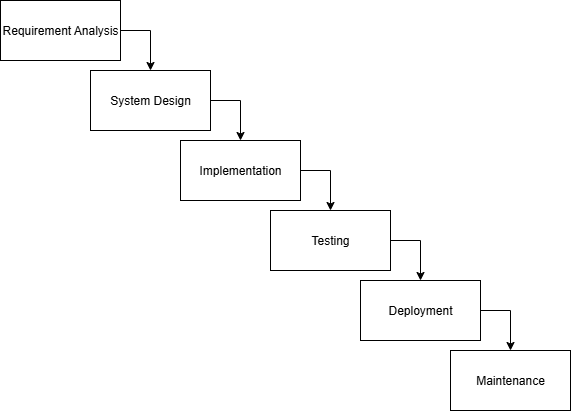

Software development methodologies have proliferated, yet the academic literature mostly examines individual models in isolation rather than comparing them side by side. This gap leaves practitioners without a structured basis for selecting a model suited to a given project's requirements. This study systematically categorizes and compares software development models (flow-based, structured, iteration-based, object-oriented, and composite) to assess their flexibility, risk management, expertise requirements, and applicability across project sizes and environments. Using a literature review, it analyzes existing models (e.g., Waterfall, Agile, DevOps) and evaluates them along eight dimensions: flexibility, risk, time, expertise, project size, customer involvement, delivery frequency, and quality assurance mechanisms. The findings indicate that agile models (e.g., Scrum, XP) excel in flexibility, customer engagement, and iterative delivery, which makes them well suited to dynamic projects. Traditional models (e.g., Waterfall) suit stable, small-scale projects but lack adaptability. High-risk projects benefit from Spiral and MDDF, while DevOps and Crystal methodologies balance structure and flexibility. The study underscores the growing trend toward flexible, collaborative approaches in modern software development and the importance of context-specific model selection for improving efficiency and outcomes.